Open-source code and projects

We periodically release all of our source code as open-source projects on GitHub. All of this code is released under the GPLv3 licence; please cite the corresponding articles when using it.

You can find more information on our team's GitHub page: http://www.github.com/acids-ircam

Visit each team member's GitHub page for more information.

________________________________________________________

vschaos

vschaos is an open-source Python library for variational neural audio synthesis. This library, based on <a href="https://pytorch.org/">PyTorch</a>, provides high-level functions for creating, training, and using many variants of variational auto-encoders.

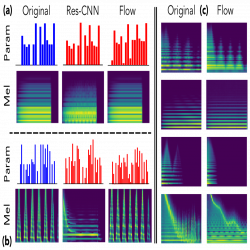

Flow synthesizer

Sound synthesizers are pervasive in music, and they now even define entirely new music genres. However, their complexity and sets of parameters render them difficult to master. We created an innovative generative probabilistic model that learns an invertible mapping between a continuous auditory latent space of a synthesizer's audio capabilities and the space of its parameters. We approach this task using variational auto-encoders and normalizing flows. Using this new learning model, we can learn the principal macro-controls of a synthesizer, which allows travelling across its organized manifold of sounds, performing parameter inference from audio to control the synthesizer with our voice, and even addressing semantic dimension learning, where we find how the controls fit given semantic concepts, all within a single model.

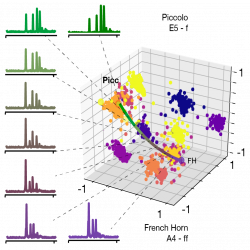
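
To give a feel for what an invertible mapping between a latent space and a parameter space can look like, here is a minimal sketch of a single affine coupling layer, one common building block of normalizing flows. It is not the model used in the Flow synthesizer project: the function names, the toy `scale_fn` and `shift_fn`, and the 4-dimensional vectors are all assumptions chosen for illustration, and in a real flow the scale and shift would be produced by trained neural networks.

```python
import math

def coupling_forward(z, scale_fn, shift_fn):
    """Map a latent vector z to a parameter-like vector v.

    The first half of z passes through unchanged; the second half is
    scaled and shifted by functions of the first half. Returns v and the
    log-determinant of the Jacobian (the sum of the log-scales).
    """
    half = len(z) // 2
    z_a, z_b = z[:half], z[half:]
    s, t = scale_fn(z_a), shift_fn(z_a)
    v_b = [zb * math.exp(si) + ti for zb, si, ti in zip(z_b, s, t)]
    return z_a + v_b, sum(s)

def coupling_inverse(v, scale_fn, shift_fn):
    """Exactly undo coupling_forward, recovering z from v."""
    half = len(v) // 2
    v_a, v_b = v[:half], v[half:]
    s, t = scale_fn(v_a), shift_fn(v_a)
    z_b = [(vb - ti) * math.exp(-si) for vb, si, ti in zip(v_b, s, t)]
    return v_a + z_b

# Hypothetical stand-ins for learned networks (illustration only).
scale_fn = lambda a: [math.tanh(x) for x in a]
shift_fn = lambda a: [0.5 * x for x in a]

z = [0.3, -1.2, 0.8, 0.1]                      # toy latent point
v, log_det = coupling_forward(z, scale_fn, shift_fn)
z_back = coupling_inverse(v, scale_fn, shift_fn)
print(v, log_det, z_back)
```

Because the first half of the vector is left untouched, the inverse can recompute the same scale and shift and undo the transform exactly, which is what makes this kind of layer usable in both directions.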

Generative timbre spaces

Timbre spaces have been used to study the relationships between different instrumental timbres, based on perceptual ratings. However, they provide limited interpretability, no generative capability and no generalization. Here, we show that variational auto-encoders (VAEs) can alleviate these limitations by regularizing their latent space during training, so that the latent space of audio follows the same topology as the perceptual timbre space. Hence, we bridge audio analysis, perception and synthesis in a single system.

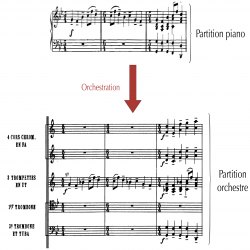
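
One simple way to picture such a regularization is a penalty that compares pairwise distances between latent points with the corresponding perceptual dissimilarities. The sketch below assumes a mean squared difference over Euclidean latent distances; this is an illustrative choice, not necessarily the criterion used in the project, and the function names and toy data are hypothetical. In training, a term like this would be added to the usual VAE loss.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def timbre_regularizer(latents, perceptual_dist):
    """Mean squared gap between latent and perceptual pairwise distances.

    latents: one latent vector per instrument.
    perceptual_dist: symmetric matrix of perceptual dissimilarities,
    indexed in the same instrument order as latents.
    """
    n = len(latents)
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            gap = euclidean(latents[i], latents[j]) - perceptual_dist[i][j]
            total += gap ** 2
            pairs += 1
    return total / pairs

# Toy data (illustration only): three instruments in a 2-D latent space.
perceptual = [[0.0, 5.0, 4.0],
              [5.0, 0.0, 3.0],
              [4.0, 3.0, 0.0]]
matching = [[0.0, 0.0], [3.0, 4.0], [0.0, 4.0]]      # distances 5, 4, 3
mismatched = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]    # distances do not agree

penalty_matching = timbre_regularizer(matching, perceptual)
penalty_mismatched = timbre_regularizer(mismatched, perceptual)
print(penalty_matching, penalty_mismatched)
```

The penalty vanishes when the latent geometry reproduces the perceptual distances and grows as the two diverge, so minimizing it pulls the latent space toward the layout of the timbre space.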

Orchestral piano

We recently developed the first live orchestral piano (LOP) system, a real-time automatic projective orchestration project. The system provides a way to compose music with a full classical orchestra in real time by simply playing on a MIDI keyboard. Given a piano score, the objective is to automatically generate an orchestration.