
Highlighted papers

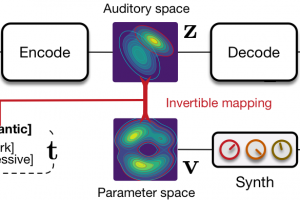

Flow synthesizer

Sound synthesizers are pervasive in music, and they now even define entirely new music genres. However, their complexity and large sets of parameters make them difficult to master. We created a new generative probabilistic model that learns an invertible mapping between a continuous auditory latent space of a synthesizer's audio capabilities and the space of its parameters. We approach this task using variational auto-encoders and normalizing flows. With this model, we can learn the principal macro-controls of a synthesizer, which allows users to travel across its organized manifold of sounds, perform parameter inference from audio in order to control the synthesizer with the voice, and even address semantic dimension learning, where we find how the controls map to given semantic concepts, all within a single model.
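To give a feel for what an invertible mapping means here, the sketch below implements a single affine coupling layer, one common building block of normalizing flows, in plain Python. It is only an illustration under stated assumptions: the function names, the toy conditioner functions `toy_scale` and `toy_shift`, and the four-dimensional example vector are all hypothetical, and the actual model may use different flow layers and learned networks.

```python
import math

def coupling_forward(z, scale_fn, shift_fn):
    """Affine coupling: keep the first half, transform the second half.

    Assumes len(z) is even. Returns the transformed vector and the
    log-determinant of the Jacobian of the transform.
    """
    half = len(z) // 2
    z_a, z_b = z[:half], z[half:]
    s, t = scale_fn(z_a), shift_fn(z_a)
    v_b = [b * math.exp(si) + ti for b, si, ti in zip(z_b, s, t)]
    return z_a + v_b, sum(s)

def coupling_inverse(v, scale_fn, shift_fn):
    """Exact inverse of coupling_forward, using the untouched first half."""
    half = len(v) // 2
    v_a, v_b = v[:half], v[half:]
    s, t = scale_fn(v_a), shift_fn(v_a)
    z_b = [(b - ti) * math.exp(-si) for b, si, ti in zip(v_b, s, t)]
    return v_a + z_b

# Hypothetical conditioners; a real model would use small neural networks.
def toy_scale(x):
    return [0.5 * math.tanh(xi) for xi in x]

def toy_shift(x):
    return [0.1 * xi for xi in x]

latent = [0.3, -1.2, 0.8, 2.0]  # stand-in for a point in the latent space
params, log_det = coupling_forward(latent, toy_scale, toy_shift)
recovered = coupling_inverse(params, toy_scale, toy_shift)
```

Because the first half of the vector passes through unchanged, the inverse can recompute the same scale and shift, so `recovered` matches `latent` up to floating-point error. This is the property that lets a flow move in both directions between a latent point and a parameter setting.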

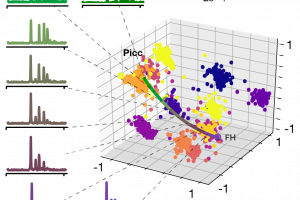

Generative timbre spaces

Best presentation award at ISMIR 2019

Timbre spaces have been used to study the relationships between different instrumental timbres, based on perceptual ratings. However, they provide limited interpretability, no generative capability and no generalization. Here, we show that variational auto-encoders (VAE) can alleviate these limitations, by regularizing their latent space during training in order to ensure that the latent space of audio follows the same topology as that of the perceptual timbre space. Hence, we bridge audio analysis, perception and synthesis into a single system.
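One simple way to picture this kind of regularization is a penalty that grows when distances between latent points disagree with perceptual dissimilarities between the corresponding instruments. The sketch below is a simplified illustration, not the loss used in the paper: the function names, the squared-error form, and the toy data are assumptions made for the example.

```python
import math

def pairwise_distances(points):
    """Euclidean distance between every pair of latent points."""
    n = len(points)
    return [[math.dist(points[i], points[j]) for j in range(n)]
            for i in range(n)]

def perceptual_regularizer(latent_points, perceptual_dissimilarity):
    """Mean squared gap between latent distances and perceptual ratings.

    perceptual_dissimilarity is a symmetric matrix with one row per
    instrument (hypothetical values in the example below).
    """
    latent_d = pairwise_distances(latent_points)
    n = len(latent_points)
    total, count = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            gap = latent_d[i][j] - perceptual_dissimilarity[i][j]
            total += gap ** 2
            count += 1
    return total / count

# Toy data: three instruments embedded in a 2-D latent space.
latent_points = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
matching = [[0, 5, 10], [5, 0, 5], [10, 5, 0]]      # agrees with the latent layout
mismatched = [[0, 5, 10], [5, 0, 8], [10, 8, 0]]    # one pair rated further apart

penalty_matching = perceptual_regularizer(latent_points, matching)
penalty_mismatched = perceptual_regularizer(latent_points, mismatched)
```

In a training setup, a term of this kind would be added to the usual VAE objective with a weighting factor, so that the latent space is pulled toward the layout suggested by the perceptual ratings while still reconstructing audio.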

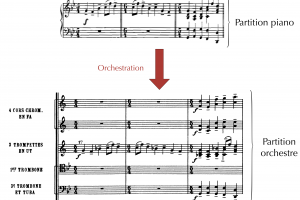

Live Orchestral Piano

We recently developed the first live orchestral piano (LOP) system. The system provides a way to compose music with a full classical orchestra in real time by simply playing on a MIDI keyboard. Our approach is to perform statistical inference on a corpus of MIDI files. This corpus contains piano scores and their orchestrations by famous composers. This objective might seem too ambitious: learning orchestration through the mere observation of scores? We believe that by observing the correlation between piano scores and the corresponding orchestrations made by famous composers, we might be able to infer the spectral knowledge of composers. The probabilistic models we investigate are neural networks with conditional and temporal structures.

________________________________________________________

Full references

Bitton, A., Esling, P., Caillon, A., & Fouilleul, M. Assisted Sound Sample Generation with Musical Conditioning in Adversarial Auto-Encoders. Proceedings of the DaFX Conference 2019. arXiv preprint arXiv:1904.06215. (2019) [pdf]

Doras, G., Esling, P., & Peeters, G. On the use of u-net for dominant melody estimation in polyphonic music. IEEE International Workshop on Multilayer Music Representation, Milano, Italy, pp. 66-70 (2019) [pdf]

Chemla-Romeu-Santos, A., Ntalampiras, S., Esling, P., Haus, G., & Assayag, G. (2019, September). Cross-Modal Variational Inference For Bijective Signal-Symbol Translation. In Proceedings of the 22nd International Conference on Digital Audio Effects (DAFx-19). [pdf]

Scurto, H., & Chemla-Romeu-Santos, A. (2019, October). Machine Learning for Computer Music Multidisciplinary Research: A Practical Case Study. In 14th International Symposium on Computer Music Multidisciplinary Research (CMMR’19). [pdf]

Esling, P. Chemla-Romeu-Santos, A. Bitton, A. “Bridging audio analysis, perception and synthesis with perceptually-regularized variational timbre spaces”, Proceedings of the 19th International Society for Music Information Retrieval, vol. 1, Paris, France. AR: 23.2%. Best paper award. (2018) [pdf]

Bitton, A., Esling, P., & Chemla-Romeu-Santos, A. (2018). Modulated Variational Auto-Encoders for many-to-many musical timbre transfer. arXiv preprint arXiv:1810.00222. [pdf]

Crestel, L. Esling, P. Ghisi, D. Meier, R. Generating orchestral music by conditioning SampleRNN, Timbre 2018 Conference: Timbre is a many-splendored thing. Montreal, 07/2018. [pdf]

Bitton, A. Chemla-Romeu-Santos, A. & Esling, P. Timbre transfer between orchestral instruments with semi-supervised learning. Timbre 2018 Conference: Timbre is a many-splendored thing, Montreal, 07/2018. [pdf]

Esling, P., Chemla-Romeu-Santos, A., & Bitton, A. “Generative timbre spaces: regularizing variational auto-encoders with perceptual metrics”. Proceedings of International DaFX conference, pp.23-27, Portugal (2018) [pdf]

Carsault, T., Nika, J., & Esling, P. Using musical relationships between chord labels in automatic chord extraction tasks. In International Society for Music Information Retrieval Conference (ISMIR), Paris, France, (2018). [pdf]

Cordier, T. Esling, P. Lejzerowicz, F. Visco, J. Ouadahi, A. Martins, C. “Predicting the ecological quality status of marine environments from eDNA metabarcoding data using supervised machine learning”, Environmental science & technology 51 (16), 9118-9126. Impact Factor: 6.653 (2017) [pdf]

Talbot, P. Agon, C. & Esling, P. “Interactive computer-aided composition with constraints” Proceedings of the 43rd International Computer Music Conference (ICMC 2017) [pdf]

Crestel, L., Esling, P., Heng, L., & McAdams, S. A database linking piano and orchestral midi scores with application to automatic projective orchestration. Proceedings of the International Symposium for Music Information Retrieval. 321, Suzhou, China (2017) [pdf]

Crestel, L. Esling, P. “Live Orchestral Piano, a system for real-time orchestral music generation.” In 14th Sound and Music Computing Conference, vol. 1, pp. 434. (2017) [pdf]

Esling P. Lejzerowicz F. & Pawlowski J. “Accurate multiplexing and filtering for high-throughput amplicon sequencing” Nucleic Acids Research, vol. 34, Issue 5, pp. 2153-2524. Impact Factor: 8.055 (2015) [link]

Esling P. Agon C., “Multiobjective time series matching and classification” IEEE Transactions on Speech Audio and Language Processing, vol. 21, no. 10, pp. 2057–2072. Impact Factor: 1.675 (2013) [link]

Esling P. Agon C. "Time series data mining", ACM Computing Surveys, vol. 45 (1), Impact Factor: 4.543. (2012) [pdf]

Esling, P. Carpentier, G., & Agon, C. “Dynamic musical orchestration using a spectro-temporal description of musical instruments”. In Lecture Notes in Computer Science, vol. 6025, pp. 371-380 (2010) [link]

Hackbarth B., Schnell N. Esling P., Schwarz D. “Composing Morphology: Concatenative Synthesis as an Intuitive Medium for Prescribing Sound in Time”, Contemporary Music Review, vol.32, no. 1. (2012) [link]

Scholar profiles

Philippe Esling

Léopold Crestel